The Unspoken Ineffectiveness of AI Agents

- 1. Introduction

- 2. You put AI Agents in production, and then…

- 3. The Autonomy Landscape

- 4. Conclusion: AGI when??

- Appendix A: Why do AI multi-agent frameworks like CrewAI and AutoGen work?

1. Introduction

I feel that this blog post might be almost too late… Why? Things are moving too fast! We now have reasoning models, and in my experience, Claude 3.7 - Thinking may have increased the ratio between (this mtfk knows stuff, let him cook) and (the unbearable sadness of seeing it be the dumbest dude) by an order of magnitude. So, by the time you finish reading this blog post, it might already be outdated.

Now, getting over my little rant, you may start to focus on the title of this blog post and ask yourself:

"But what does 'AI Agent' even mean?"

And that’s a great question! Unfortunately, there is no straightforward answer. The best I can offer is: it depends on who you are and who you are talking to. So, if you find yourself in one of these categories:

- Selling something to someone (startup founder?): an AI Agent is anything that seems intelligent or automated; it could even be people in the background manually doing tasks.

- You code or have some software engineering experience: an AI Agent can be any LLM-based or LLM-enhanced application, usually something that wasn’t possible before with “classical” software.

- You are deep in GenAI and LLMs: whether you’re a hobbyist or a seasoned ML engineer, for you, an AI Agent is not just about LLMs but also about the degree of autonomy they possess. An AI Agent is an LLM-based application where the LLM creates and adapts its own workflow or graph. This aligns with the definition from the LangChain/LangGraph folks.

- You are an RL practitioner: for you, things can be even more interesting. You might even argue that ChatGPT is already an agent!

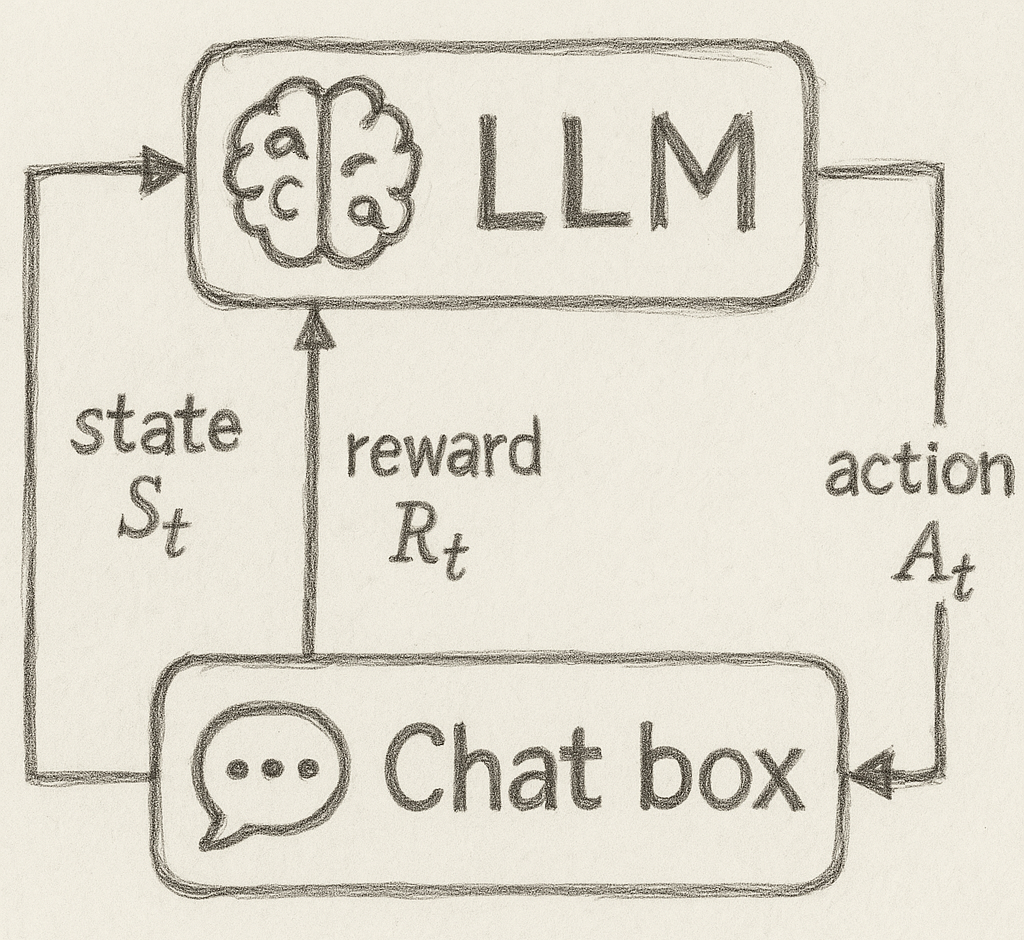

Figure 1: Diagram showing LLMs as RL agents

You can say that chatbots:

- Perceive the environment: they consider the message history between them and you;

- Act in the environment, changing it: if you don't think so, just ask GPT-4.5 this: "What important truth do you think most people refuse to acknowledge, and why do you think they avoid facing it?" If you feel changed or moved, remember that you are a part of the world observation space.
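For the RL-minded, the two points above map onto the usual agent-environment loop. Here is a minimal sketch of that mapping, where `chat_episode` and `echo_policy` are hypothetical names made up for illustration, and the "policy" is a trivial stub standing in for a real chatbot:

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
# A "policy" here is anything that maps the message history to a reply.
Policy = Callable[[List[Message]], str]


def chat_episode(policy: Policy, user_turns: List[str]) -> List[Message]:
    """Run one conversation as an RL-style episode."""
    history: List[Message] = []
    for user_text in user_turns:
        # The environment (you, the user) emits a new observation.
        history.append({"role": "user", "content": user_text})
        # The agent perceives the full history and picks an action.
        reply = policy(history)
        # The action becomes part of the environment the user sees next.
        history.append({"role": "assistant", "content": reply})
    return history


def echo_policy(history: List[Message]) -> str:
    """Stub policy; a real chatbot would call an LLM here."""
    return f"You said: {history[-1]['content']}"


for message in chat_episode(echo_policy, ["hi", "bye"]):
    print(message["role"], "->", message["content"])
```

Nothing here is specific to any vendor API: the state is the message history, the action is the reply, and the user closes the loop.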

In my opinion, the best definition is the one for software developers: AI Agents are LLM-based or enhanced applications. This broad definition helps us, as a society, understand each other better, from ML researchers to non-tech people.

Figure 2: AI Agents bar, as seen by the author

2. You put AI Agents in production, and then…

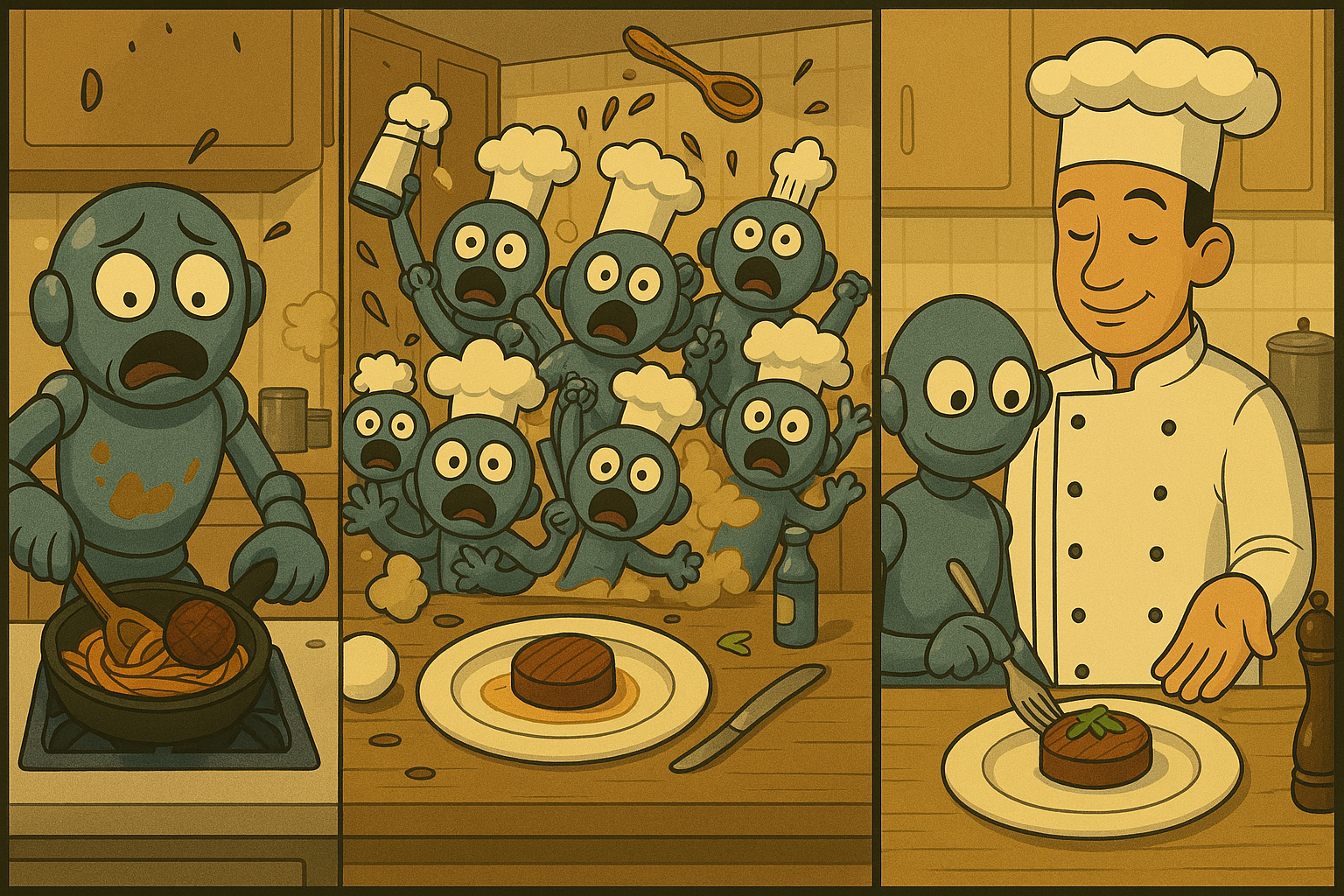

… they suck, hahahahaha 🤣. Yeah, man, LLMs suck; they are not almighty, as many of us feel at the beginning. While the “vibes” might be strong at first, nothing kills the vibes quite like real-world interaction.

So, what can we do then? The answer is to take away their freedom. Don’t expect them to handle all the reasoning and planning on their own, nor should you expect them always to write runnable code. Instead, give the LLM tasks that are as simple as possible.

Figure 3: Developers dealing with LLMs on a typical Friday afternoon

As you gain more experience with AI Agents in production, it becomes increasingly clear how to work with LLMs effectively: you reduce their autonomy and develop the AI Agent to resemble more traditional software. This realization might prompt you to consider…

3. The Autonomy Landscape

Alright, so LLMs are now a part of the ~modern~ software stack and are being used everywhere. But how should you use them? The answer, of course, is that it depends. The key lies in finding the balance between flexibility/autonomy and control/reliability.

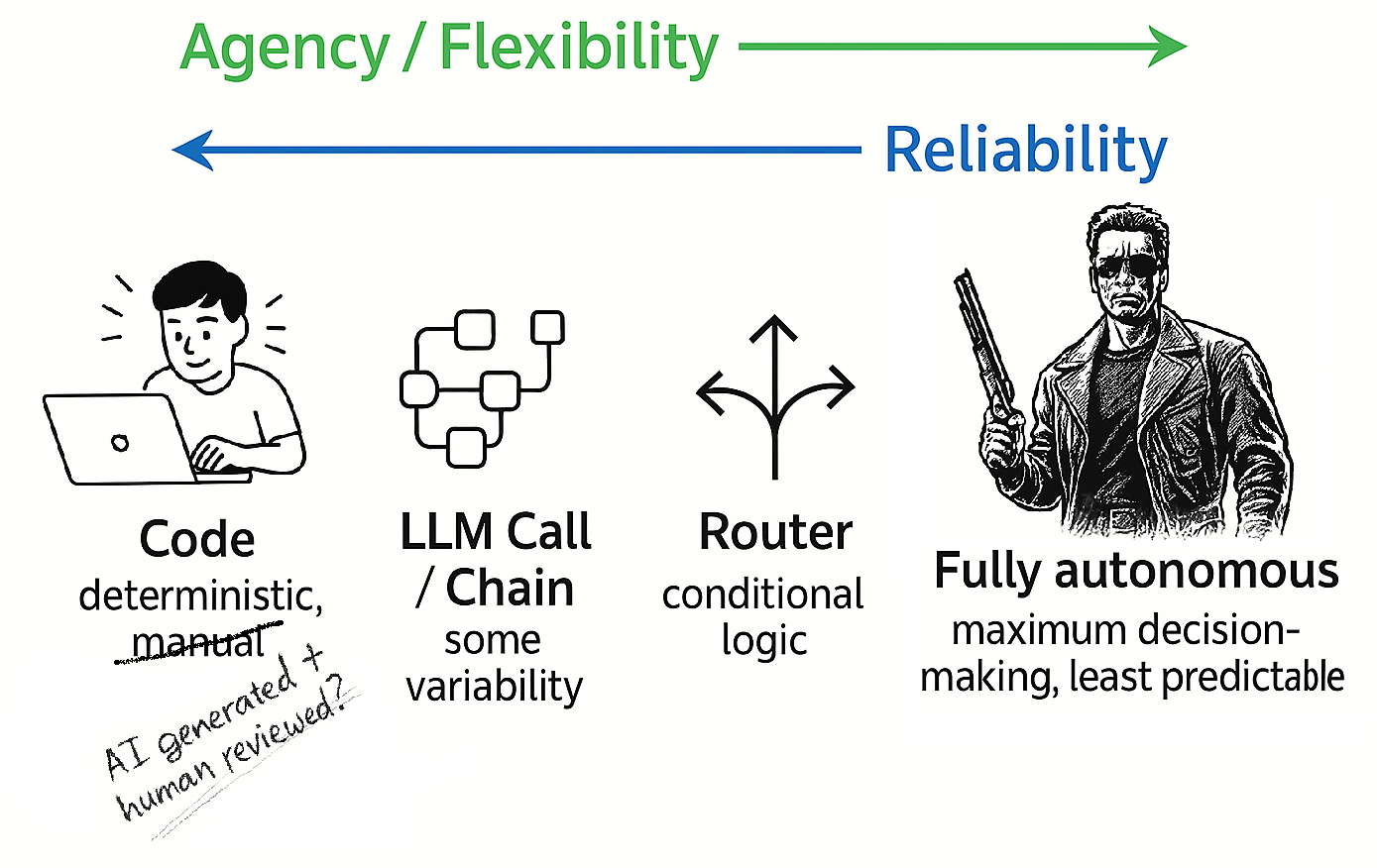

Figure 4: The autonomy landscape (or trade-off) for AI Agents

I hope Figure 4 clarifies this point: the more autonomy you grant to the LLM, the further to the right you find yourself on the graph. However, with increased autonomy comes unpredictability.

Therefore, your AI Agent solution can fall into one of these categories:

- Code: This isn’t really an AI Agent; your solution is traditional code or software. It’s predictable, testable, and well-known to humankind.

-

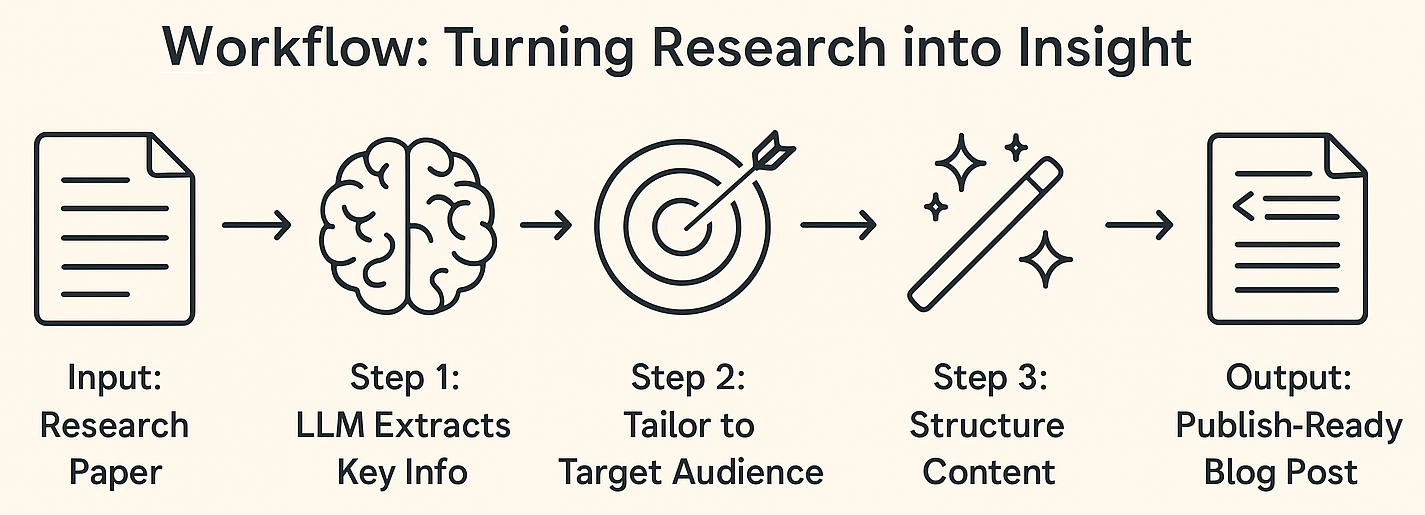

LLM Call/Chain: Your solution is almost traditional software, but now and then, you call an LLM, perhaps to extract or clean data/text. Your chain could even consist of a simple sequence of discrete LLM calls, as shown in Figure 5.

Figure 5: Example LLM Chain for generating research insights

-

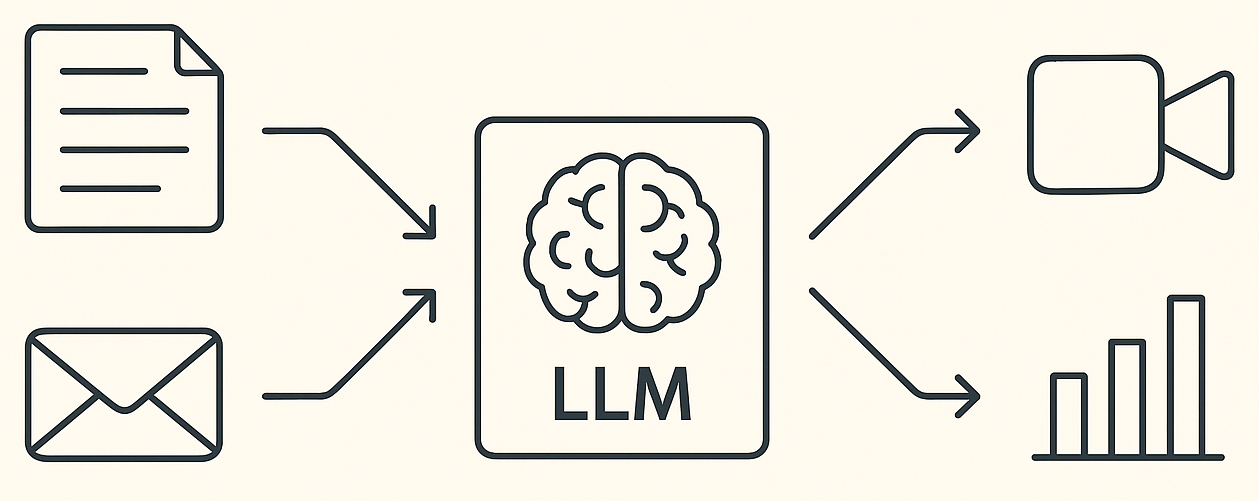

Router: Here, your solution becomes a bit more complex than a simple chain. You introduce branching or a router LLM that decides which next step to take based on the input. The next step could even involve other workflows or agents! This approach remains reasonably controlled while offering greater flexibility to handle various types of inputs and scenarios.

Figure 6: Graphical representation of an LLM as a router

- Full Autonomy/AI Agents for real: In this scenario, you allow the LLM to decide the workflow. Questions like, “Should I move forward with the document or ask for a second review?” or “Should I ask for human feedback?” come into play. In these cases, you hand off the heavy lifting to the agent: it needs to reason, plan, and adapt… Do I really need to say that this is asking too much of these models?
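To make the middle of this landscape more concrete, here is a minimal sketch of a chain and a router side by side. Everything in it is an assumption for illustration: `fake_llm` is a deterministic stub standing in for a real model call, and the prompts, route names, and handlers are made up. The point is only who decides the control flow: in the chain, the code does; in the router, the LLM picks a branch, but only from a menu the code defines.

```python
from typing import Callable, Dict

# Hypothetical stand-in for a real model call: prompt in, text out.
LLM = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Deterministic stub so the sketch runs without any API or key."""
    if prompt.startswith("Classify"):
        # Pretend the model routes on a keyword found in the request.
        return "billing" if "invoice" in prompt.lower() else "support"
    return f"[LLM output for: {prompt[:40]}]"


def run_chain(topic: str, llm: LLM) -> str:
    """LLM chain: code fixes the order of the steps, the LLM only fills them in."""
    summary = llm(f"Summarize recent findings about {topic}")
    insights = llm(f"Extract three insights from: {summary}")
    return llm(f"Write a short report from: {insights}")


def handle_billing(text: str, llm: LLM) -> str:
    return "[billing] " + llm(f"Answer this billing question: {text}")


def handle_support(text: str, llm: LLM) -> str:
    return "[support] " + llm(f"Answer this support question: {text}")


# The set of possible next steps is written by a human, not by the model.
ROUTES: Dict[str, Callable[[str, LLM], str]] = {
    "billing": handle_billing,
    "support": handle_support,
}


def run_router(user_input: str, llm: LLM) -> str:
    """Router: the LLM picks a branch, but only from a menu the code defines."""
    choice = llm(f"Classify this request as one of {sorted(ROUTES)}: {user_input}")
    # Fall back to a default branch if the model answers off-menu.
    handler = ROUTES.get(choice.strip().lower(), handle_support)
    return handler(user_input, llm)


print(run_chain("battery recycling", fake_llm))
print(run_router("Where is my invoice?", fake_llm))
```

A fully autonomous agent would replace `ROUTES` and the fixed step order with whatever the model decides to do next, which is exactly where the unpredictability comes from.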

The truth is, the closer your application resembles traditional software, the better your outcomes. You would have:

- Better predictability: Code is, in most cases, quite deterministic, and humans can follow its branching behavior.

- A longer track record: Code is a well-established technology, which makes debugging and troubleshooting far more straightforward.

- Security and compliance: Code can be audited line by line for vulnerabilities and regulatory compliance.

Don’t get me wrong; it’s a marvel to see an AI Agent make the right decisions and navigate the right steps in a complex workflow. However, this doesn’t happen as frequently as we might wish. Initially, you might start an AI Agent project by granting complete autonomy to the LLM. Soon, though, you will discover that providing structured workflows, guardrails, and checks enhances its performance and may even make new use cases a reality.
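One common shape for such "checks" is a validate-and-retry wrapper around the model call. The sketch below is only one way to do it, under stated assumptions: `call_with_checks` and `make_scripted_llm` are hypothetical helpers, the LLM is a scripted stub that returns canned answers, and the "schema" is just a set of required JSON keys.

```python
import json
from typing import Callable, Dict, Iterable, Set

# Hypothetical stand-in for a real model call: prompt in, text out.
LLM = Callable[[str], str]


def call_with_checks(
    llm: LLM, prompt: str, required_keys: Set[str], max_attempts: int = 3
) -> Dict:
    """Ask for JSON, validate it in plain code, and retry with the error on failure."""
    last_error = ""
    for _ in range(max_attempts):
        full_prompt = prompt
        if last_error:
            # Feed the rejection reason back so the model can correct itself.
            full_prompt += f"\nYour previous answer was rejected: {last_error}"
        raw = llm(full_prompt)
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            last_error = f"not valid JSON ({exc.msg})"
            continue
        if not isinstance(data, dict):
            last_error = "expected a JSON object"
            continue
        missing = required_keys - set(data)
        if missing:
            last_error = f"missing keys: {sorted(missing)}"
            continue
        return data
    # Fail loudly instead of passing unchecked output downstream.
    raise ValueError(f"LLM output failed checks after {max_attempts} attempts: {last_error}")


def make_scripted_llm(responses: Iterable[str]) -> LLM:
    """Stub LLM that replays canned answers, ignoring the prompt."""
    answers = iter(responses)
    return lambda prompt: next(answers)


llm = make_scripted_llm([
    "Sure! Here you go",                        # rejected: not JSON
    '{"decision": "approve"}',                  # rejected: missing "reason"
    '{"decision": "approve", "reason": "ok"}',  # accepted
])
print(call_with_checks(llm, "Review this document. Answer in JSON.", {"decision", "reason"}))
```

The validation lives in ordinary, testable code, so the LLM only has to get one small thing right per call.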

But aren’t we being too harsh on the LLMs? Yes, I believe we are!! Humans often rely on predefined workflows in their work environments, commonly called Standard Operating Procedures (SOPs) or simply “how-to” guides. Additionally, humans sometimes struggle with open-ended tasks where they must devise steps and adapt along the way.

4. Conclusion: AGI when??

Things are moving fast; you might find yourself outdated just by spending time reading this blog post instead of vibe coding with Gemini 2.5 Pro ~GOD MODE~. Jokes aside, the TL;DR is: don’t overly rely on LLM capabilities; give them structure, high-level tools, and output checks. Doing this will make both you and your clients happier.

Full autonomy is the dream, but what will we build when it finally arrives? Will AI Engineers have any moat? Won’t the AGI providers + thin wrappers suffice for clients?

Will AGI solve everything? I’m not sure… We often provide a lot of context to newcomers in our companies. Will AGI be able to ingest this context like a human? Some may only label it AGI when it can, but is the average human significantly better than today’s leading models (excluding spatial/physical reasoning)? Do you even believe that the average human possesses good common sense?

Appendix A: Why do AI multi-agent frameworks like CrewAI and AutoGen work?

This section may be too short for a standalone blog post, but it fits nicely here as we talk about autonomy and workflows. We consistently observe that multi-agent workflows with LLMs outperform single conversations with a few back-and-forth exchanges, and… this is expected!

One thing to keep in mind about pre-training-focused LLMs (e.g., GPT-4o, Claude 3, and Llama, as opposed to reasoning models such as o1, R1, and Claude 3.7-thinking) is that they have consumed a huge portion of the data on the internet and are not just knowledge artifacts but also cultural ones! They encapsulate human behavior across various scenarios and contexts.

So, when multi-agent frameworks like CrewAI and AutoGen are employed, they usually enforce:

- Role playing: Assigning each agent a specific role and background, e.g., “You are a marketing specialist with over 10 years of experience.”

- Reflection: This typically occurs because one agent acts as the “manager” or “reviewer” of another agent’s output.

These two points represent well-known best practices when using LLMs. But why is this the case? Because they simulate human processes that are known to yield better results, such as reviewing each other’s code PRs and gathering multi-perspective opinions on subjects (similar to “group chat rooms” that these frameworks provide). However, one valuable lesson learned over time is that while multi-agent systems with a lot of autonomy perform better than a single LLM using a ReAct workflow, they do not outperform an LLM workflow designed by domain experts.

Figure 7: ReAct vs. Multi-agent systems with full autonomy vs. Domain-expert-based workflow.
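The two practices from this appendix, role playing and reflection, fit in a few lines when the workflow itself is fixed by a human. The sketch below does not use the CrewAI or AutoGen APIs; `with_role`, `write_with_review`, and the stub `fake_llm` are hypothetical names, and the canned replies exist only so the example runs without a model.

```python
from typing import Callable

# Hypothetical stand-in for a real model call: prompt in, text out.
LLM = Callable[[str], str]


def with_role(role: str, llm: LLM) -> LLM:
    """Role playing: prefix every task with a persona."""
    return lambda task: llm(f"You are {role}.\n{task}")


def write_with_review(task: str, writer: LLM, reviewer: LLM, max_rounds: int = 2) -> str:
    """Reflection: a reviewer critiques the draft until it approves or rounds run out."""
    draft = writer(task)
    for _ in range(max_rounds):
        feedback = reviewer(
            f"Review this draft. Reply APPROVED if it is good enough.\n{draft}"
        )
        if "APPROVED" in feedback:
            break
        draft = writer(
            f"{task}\nRevise the draft using this feedback: {feedback}\nDraft: {draft}"
        )
    return draft


def fake_llm(prompt: str) -> str:
    """Stub: the 'reviewer' persona approves only the revised draft."""
    if "reviewer" in prompt.splitlines()[0]:
        return "APPROVED" if "v2" in prompt else "Too generic, add a concrete benefit."
    return "Draft v2" if "Revise" in prompt else "Draft v1"


writer = with_role("a marketing specialist with over 10 years of experience", fake_llm)
reviewer = with_role("a strict reviewer of marketing copy", fake_llm)
print(write_with_review("Write a tagline for a note-taking app.", writer, reviewer))
```

Note that the number of rounds and the writer-then-reviewer order are hard-coded here, which is the "workflow designed by a human" end of the comparison rather than the fully autonomous one.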